On my MacBook the $VIMRUNTIME directory is /usr/share/vim/vim62. Now execute `sudo ln -s /usr/share/vim/vim62/macros/less.sh /usr/bin/vless`. If you got another path before, you have to edit this command appropriately. Now vless acts nearly like less, but with syntax highlighting. For reference, wget's help lists the relevant option as `-O`, `--output-document=FILE`: write documents to FILE.
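Since the Vim runtime path differs from machine to machine, it can help to build the symlink command from that path instead of typing it by hand. The following is only a minimal sketch: the function name `vless_link_command` is made up for illustration, and it merely prints the command so you can check it before running it yourself.

```shell
#!/bin/sh
# Hypothetical helper (not part of Vim): print the symlink command for a
# given Vim runtime directory instead of running it, so it can be reviewed.
vless_link_command() {
    runtime_dir="$1"
    printf 'sudo ln -s %s/macros/less.sh /usr/bin/vless\n' "$runtime_dir"
}

# The path from this post; replace it with your own runtime directory.
vless_link_command /usr/share/vim/vim62
```

Printing rather than executing keeps the sketch harmless on machines where the path is different or /usr/bin is not writable.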

#Wget to stdout mac os x#

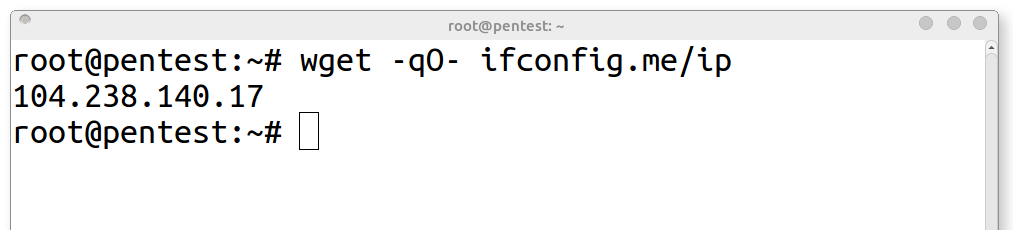

Having a nice command line at hand, there should be an easy way to look at an RSS feed, but wget displays just some crap if I redirect its output to STDOUT. If you use wget, you need to add some additional options to retarget it from a file to stdout. When passed `-O-`, it pipes the downloaded file to standard output. When passed `-O filename`, it keeps appending to filename and reparses the whole file after each download, loading it completely into memory (or mapping it). One help text puts it this way: `-O, -stdout`: write the file that is being downloaded to standard output.

And really, now it works, and it's a very simple way to look at my RSS feeds.

In a cron job, appending `> /dev/null 2>&1` to the command directs both STDOUT (1) and STDERR (2) to /dev/null, so all output of the cronned command is suppressed.

There's even a way to get syntax highlighting! If you've installed a reasonably up-to-date version of vi(m), there is a script called less.sh in your $VIMRUNTIME directory, which acts as a replacement for less that provides syntax highlighting. To use this script on Mac OS X Tiger, you just have to follow a few simple steps: find your $VIMRUNTIME directory, then symlink its macros/less.sh to /usr/bin/vless.
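Put together, the pipeline could look like the sketch below. It assumes that wget is installed, that vless has been linked into your path, and that vless accepts input on stdin. The function name `show_feed` and the URL in the usage comment are placeholders, not something taken from the original setup.

```shell
#!/bin/sh
# Hypothetical wrapper: fetch a feed and page it with syntax highlighting.
#   -q    suppress wget's progress and log messages
#   -O -  write the downloaded document to standard output instead of a file
show_feed() {
    wget -q -O - "$1" | vless
}

# Usage (placeholder URL):
#   show_feed "http://example.com/feed.xml"
```

Wrapping the pipeline in a function keeps the two options in one place, so the feed URL is the only thing that changes between calls.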

#Wget to stdout how to#

Everything seems to be fine, except that wget doesn't direct output to stdout correctly. I'm using the GetGnuWin32-0.6.3.exe package on Windows 7 64-bit. If I run `wget -qO- > foo`, the output goes to the screen and foo ends up as a zero-byte file. It's not using stderr either; I tried 2>&1 and 3>&1.

I wanted the thing that is wget-ed to go to stdout, and the normal stdout to go to null (i.e. ignore what it usually prints, and instead print the …). The `-o /dev/null` is only necessary if you truly don't care about errors, since without it errors will be written to stderr (while the file is written to stdout). wget cannot recurse if it does not store the downloaded files, at least temporarily, as it does for `--spider`.

Wow, even Firefox tries to be smarter than me and redirects me to my Bloglines account. (I'm sure this is a feature I activated some time ago, but I have no idea how to switch it off.)
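The split between the two streams is easier to see with a stand-in that needs no network. In the sketch below, `fake_fetch` is a made-up function that only mimics the behaviour described here for wget with `-O-`: the payload goes to stdout and the log messages go to stderr. It is not wget itself.

```shell
#!/bin/sh
# Stand-in for a downloader (hypothetical, no network needed):
# payload on stdout, log chatter on stderr.
fake_fetch() {
    echo "log: connecting..." >&2
    echo "<rss>payload</rss>"
}

# Keep the payload, discard only the log (stderr).
payload="$(fake_fetch 2>/dev/null)"
echo "payload: $payload"

# Discard both streams, as one would for a silent cron job.
# Order matters: stdout is pointed at /dev/null first, then stderr follows it.
silenced="$(fake_fetch >/dev/null 2>&1)"
echo "silenced: [$silenced]"
```

With the real tool, the counterpart of the first form would be something like `wget -O - -o /dev/null URL`, which sends the log to /dev/null while the document still goes to stdout.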

But any up-to-date computer geek has more than one browser installed, so I fired up Firefox and tried to access the feed. Of course this is useful for most Safari users, but it can get in your way when you try to develop an RSS-based web application.

Safari shows me a very nice HTMLified version of the feed. curl, by contrast, can be instructed to save the data it receives into a local file instead, using the `-o`, `--output` or `-O`, `--remote-name` options.
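For comparison, printing a feed and saving it with curl might look like the sketch below. The function names, URL and file name are placeholders chosen for illustration; only the `-s` and `-o` options come from curl itself.

```shell
#!/bin/sh
# Hypothetical helpers around curl.
# -s silences the progress meter; with no output option the body goes to stdout.
print_feed() {
    curl -s "$1"
}

# -o FILE saves the body into FILE instead of printing it.
save_feed() {
    curl -s -o "$2" "$1"
}

# Usage (placeholder URL and file name):
#   print_feed "http://example.com/feed.xml" | less
#   save_feed  "http://example.com/feed.xml" feed.xml
```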

#Wget to stdout code#

Redirecting wget to STDOUT – now with Syntax Highlighting

Sometimes the simplest-looking tasks can become complicated, especially when modern computers are involved. Today I tried to examine the source code of some RSS feeds. If not told otherwise, curl writes the received data to stdout.
